PyTorch vs. TensorFlow – a detailed comparison

TensorFlow and PyTorch are two of the most popular frameworks for delivering machine learning projects. Both are great and versatile tools, yet there are several important differences between them, making them better suited for particular projects. So, what are the differences?

There are also many other frameworks for working with big data and machine learning. Is it fair to leave them out of the comparison?

Machine learning frameworks landscape

Currently, there are at least 6 frameworks:

- Scikit-Learn

- TensorFlow

- PyTorch

- Caffe

- Microsoft Cognitive Toolkit

- Firebase

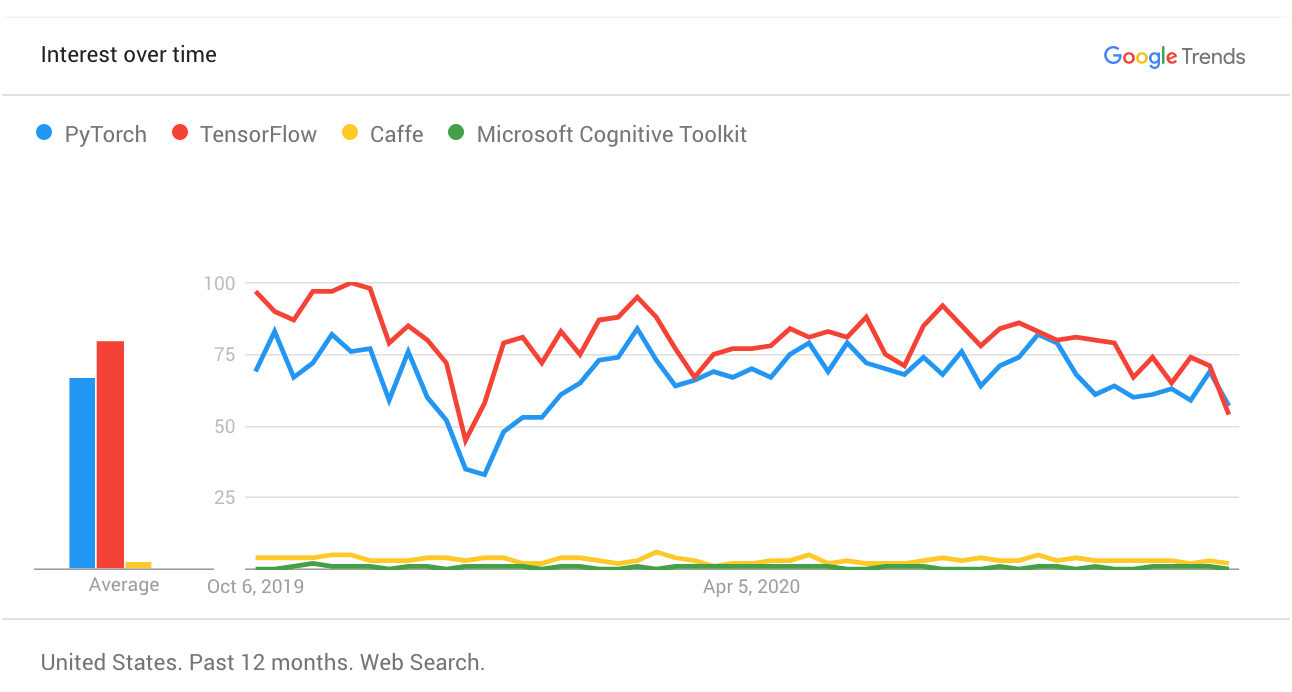

There is no direct way to compare them apart from their popularity in Google Search. By that measure, the duopoly of TensorFlow and PyTorch is undeniable: only these two generate numbers big enough to register clearly. Caffe and the Microsoft Cognitive Toolkit are included in the graph, but the rest are left out because their monthly search volume is too low to make a comparison possible.

So, there is PyTorch, there is TensorFlow and there is a big empty nothingness.

Keras vs PyTorch?

Until recently, most articles and guides around the web compared Keras and PyTorch while omitting TensorFlow. The reason was simple: Keras is a high-level API that can be used “above TensorFlow” to access the functionality it provides without the need to dig into the more complicated aspects of the code.

Keras proved to be so good that it was incorporated into the TensorFlow project and is now an inherent part of it. The comparison is therefore now simply PyTorch vs TensorFlow.

What is TensorFlow at a glance?

TensorFlow was initially developed by the Google Brain team. It is an open-source library used for numerical computation and large-scale machine learning, and it is used in many heavy-duty applications.

TensorFlow lets the developer describe a computation as a graph, that is, a series of processing nodes connected by the data flowing between them. The high-level working environment is Python, where the nodes and tensors are exposed as Python objects. The computations themselves, however, are executed by high-performance C++ binaries.

What is PyTorch at a glance?

PyTorch is a Facebook-backed framework that competes directly with TensorFlow and provides similar functionality. The framework was developed by the Facebook AI Research Lab and is distributed as open-source software under the BSD license.

The software is based on the Torch library that was initially developed using the Lua programming language. PyTorch leverages the popularity and flexibility of Python while keeping the convenience and functionality of the original Torch library.

PyTorch vs TensorFlow – a detailed comparison

From a non-specialist point of view, the only significant difference between PyTorch and TensorFlow is the company backing each one. It is worth noting that the differences between the frameworks, once very significant, are now, in 2020, less and less pronounced, as each strives to keep up with the other. Nonetheless, they are still far from identical.

In fact, there are several important differences between these frameworks that the data scientists and project managers need to know about.

Ease of use

TensorFlow used to be a lower-level deep learning framework that became friendlier with the introduction of the Keras high-level API. Things changed significantly with TensorFlow 2.0, where Keras was incorporated into the core project.

From then on, both frameworks became user friendly and relatively easy to apply in daily jobs.

TensorFlow

TensorFlow is now much more friendly and convenient than it used to be. The framework includes both high-level APIs and more sophisticated tools for building complicated projects. Since the incorporation of Keras into the project, multiple redundancies and inconsistencies have been trimmed and the framework provides a stable and clean working environment.

In daily work, TensorFlow with Keras offers a more concise, simpler API, which makes projects less bloated and the code more elegant. In most cases the amount of code is about the same in both frameworks, but there are examples where TensorFlow is clearly more concise.

PyTorch

PyTorch delivers a more flexible environment at the price of slightly reduced automation. It is a better pick for a team that has a deeper understanding of deep learning concepts and of the ideas behind commonly used algorithms.

The framework is more “Pythonic” in construction, so in fact, a data scientist who has greater skill in Python programming can leverage this skill to gain much more from the framework and use it in a more natural way.

But this flexibility comes with a price – this popular deep learning framework requires more lines of code to deliver a project. The differences can be significant – a simple training loop requires five lines of code in PyTorch and only one in TensorFlow.

Example:

Defining a training loop is an example of a use case where writing the code in PyTorch requires more lines of code. A typical example would be something like this:

TensorFlow with Keras:
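The original listing did not survive in this copy, so the following is a minimal sketch rather than the author's code. It assumes TensorFlow 2.x; the toy data, layer sizes, and hyperparameters are invented for illustration. The point is that once the model is compiled, the whole training loop is the single `model.fit` call.

```python
import numpy as np
import tensorflow as tf

# Toy regression data (illustrative assumption): y = 3x + 1 plus a little noise.
rng = np.random.default_rng(0)
x_train = rng.normal(size=(256, 1)).astype("float32")
noise = 0.1 * rng.normal(size=(256, 1)).astype("float32")
y_train = 3.0 * x_train + 1.0 + noise

# A small model; the layer sizes are arbitrary.
model = tf.keras.Sequential([
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(1),
])
model.compile(optimizer="adam", loss="mse")

# The entire training loop is this one call.
history = model.fit(x_train, y_train, epochs=5, batch_size=32, verbose=0)
print(history.history["loss"])
```

Batching, the forward pass, backpropagation, and the weight update all happen inside `fit`.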

PyTorch:
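Again, the original listing is missing here, so this is a hedged sketch of an equivalent loop, not the author's code. The toy data, model, and hyperparameters are assumptions chosen for illustration. The five numbered statements inside the loop are the part that Keras hides behind a single call.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Toy regression data (illustrative assumption): y = 3x + 1 plus a little noise.
x_train = torch.randn(256, 1)
y_train = 3.0 * x_train + 1.0 + 0.1 * torch.randn(256, 1)

model = nn.Sequential(nn.Linear(1, 8), nn.ReLU(), nn.Linear(8, 1))
loss_fn = nn.MSELoss()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)

losses = []
for epoch in range(5):
    for i in range(0, len(x_train), 32):
        x_batch, y_batch = x_train[i:i + 32], y_train[i:i + 32]
        optimizer.zero_grad()            # 1. clear gradients from the last step
        pred = model(x_batch)            # 2. forward pass
        loss = loss_fn(pred, y_batch)    # 3. compute the loss
        loss.backward()                  # 4. backpropagate
        optimizer.step()                 # 5. update the weights
    losses.append(loss.item())           # loss of the last batch in the epoch

print(losses)
```

The extra lines are also where the flexibility comes from: any of the five steps can be changed without leaving plain Python.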

Graph definition

Graphs are essential when it comes to data science in the way roads are essential for traffic and transportation. Until recently, the approach toward graphs was the key and the most significant difference between TensorFlow and PyTorch. Now, it is not a big difference, as TF introduced dynamic computational graphs in eager mode and PyTorch introduced a static computational graph. So you can have both in TF and PyTorch, depending on what you need.

Yet the tradition stays strong and it is not easy to toss the legacy.

TensorFlow

The classic TensorFlow graph was defined statically, so a data scientist (or any other user) had access to its full outline before running the model. This allowed for a significant degree of control over the training process, the data flow, and the kind of data being processed. However, the graph could not be modified after compilation, so what was an advantage at the design stage could be highly limiting later.

TensorFlow 2.0 made eager execution the default: operations run immediately and return concrete values, so the graph is effectively built dynamically as the code executes. Static graphs are still available by wrapping a Python function in tf.function, which traces it into a graph when the function is called.

PyTorch

PyTorch builds its computation graph dynamically, on the go. Before the computations run, there is no graph at all. You can also manipulate the graph on the go, which is very convenient for deep learning architectures such as recurrent neural networks, where inputs have variable length. It also makes the code considerably easier to debug.
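A minimal sketch of what this means for variable-length inputs. The `TinyRecurrent` module is a made-up toy, not a standard PyTorch layer; the number of loop iterations, and therefore the shape of the recorded graph, follows the length of each input.

```python
import torch
from torch import nn

class TinyRecurrent(nn.Module):
    """Hypothetical toy recurrent cell, unrolled once per time step."""

    def __init__(self, features: int):
        super().__init__()
        self.cell = nn.Linear(2 * features, features)

    def forward(self, sequence: torch.Tensor) -> torch.Tensor:
        # sequence has shape (steps, features); steps may differ on every call.
        state = torch.zeros(sequence.shape[1])
        for step in sequence:  # an ordinary Python loop drives the graph
            state = torch.tanh(self.cell(torch.cat([step, state])))
        return state

model = TinyRecurrent(features=4)
short = model(torch.randn(3, 4))   # graph with 3 unrolled steps
long = model(torch.randn(10, 4))   # graph with 10 unrolled steps
print(short.shape, long.shape)
```

No padding or graph recompilation is needed; each call simply records the operations it actually executed.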

On the other hand, PyTorch now also allows for the building of a static computational graph, so you have both possibilities in the two frameworks.

Here is an example of a simple addition operation running in the graph (not much difference):

TensorFlow 2.0:
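The original snippet is missing from this copy. A minimal sketch, assuming TensorFlow 2.x, that runs the same addition both eagerly and as a traced graph (the function name `add` is just an illustrative choice):

```python
import tensorflow as tf

a = tf.constant(2.0)
b = tf.constant(3.0)

# Eager execution (the TF 2 default): the result is available immediately.
eager_sum = a + b

# tf.function traces the Python function into a graph on its first call.
@tf.function
def add(x, y):
    return x + y

graph_sum = add(a, b)
print(float(eager_sum), float(graph_sum))
```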

PyTorch:
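The original snippet is missing here as well. This sketch shows the addition recorded dynamically by autograd. It then shows one way to obtain a static graph, using `torch.jit.trace`; treating tracing as the static-graph mechanism the article has in mind is an assumption.

```python
import torch

a = torch.tensor(2.0, requires_grad=True)
b = torch.tensor(3.0, requires_grad=True)

# The graph node for the addition is recorded as this line executes.
total = a + b
total.backward()  # d(total)/da = 1 and d(total)/db = 1

def add(x, y):
    return x + y

# Tracing runs the function once on example inputs and records a static graph.
traced_add = torch.jit.trace(add, (torch.tensor(1.0), torch.tensor(2.0)))
traced_sum = traced_add(torch.tensor(2.0), torch.tensor(3.0))

print(total.item(), a.grad.item(), b.grad.item(), traced_sum.item())
```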

Visualization

While programming is still about delivering the code, machine learning can be seen as data-centered programming. Having a convenient tool to deliver some visualizations can be a game-changer that eases the work of a data scientist, especially during parameter tweaking and the time-consuming training process.

TensorFlow

TensorFlow comes with TensorBoard, a convenient and flexible dashboard dedicated to visualization. It has already gained recognition as a lightweight, versatile tool.

TensorBoard can be used as a personal (or an organization-wide) tool for tracking project-related metrics like accuracy and loss. More about the TensorBoard can be found on the project website.

PyTorch

There is no dedicated visualization tool for PyTorch. However, there are multiple external tools – and you can also use TensorBoard, which works just fine.

Research use

Extreme flexibility comes at a price, but it is an asset that is hard to overlook in research work, since the core of research is to go beyond what is done “in the usual ways” and try new techniques.

TensorFlow

TensorFlow delivers a stable and legible environment, yet it lacks the flexibility required in experimental projects. Changing some of the core blocks in the training loop might require deeper digging into the code and getting to know the official documentation well.

PyTorch

PyTorch dominates research work, and it is fair to say that many of the latest advancements in AI and ML have been made in PyTorch. The framework is structured in such a way that it is extremely easy to modify existing classes or create new ones that extend them with new functionality.
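As a hedged illustration of how little code such an extension takes, here is a sketch that subclasses the built-in `nn.Linear`. The `NoisyLinear` layer and its `noise_std` parameter are invented for this example and are not part of PyTorch.

```python
import torch
from torch import nn

class NoisyLinear(nn.Linear):
    """Hypothetical layer: nn.Linear that adds Gaussian noise while training."""

    def __init__(self, in_features: int, out_features: int, noise_std: float = 0.1):
        super().__init__(in_features, out_features)
        self.noise_std = noise_std

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        out = super().forward(x)
        if self.training:  # flag toggled by .train() and .eval()
            out = out + self.noise_std * torch.randn_like(out)
        return out

layer = NoisyLinear(4, 2)
x = torch.randn(3, 4)

layer.eval()        # noise is switched off in evaluation mode
clean = layer(x)
print(clean.shape)
```

The subclass drops into any model wherever an `nn.Linear` would go, and its parameters are picked up by optimizers as usual.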

The majority of papers published at the leading scientific conferences come with supporting code written in PyTorch. Sorry, TensorFlow, not this time.

Deployment and production use

The world of research papers is fascinating and alluring, yet academia can be far from everyday work. And, in the end, it is this day-to-day labor that delivers a better life for everyone, be that a recommendation engine used by a VOD platform or AI image recognition in a camera.

TensorFlow

The predictability, readability, and stability of TensorFlow-based models make them a better pick for production use and business-oriented model deployment.

TensorFlow is also easier to run in the cloud. Delivering AI-based solutions in a cloud environment can be considered the standard approach to building modern, business-oriented artificial intelligence solutions. While academia has access to on-prem supercomputers and can run research on dedicated, cluster-based machines, businesses enjoy the convenience and scalability of cloud computing. There are specially designed tools and instances on both Amazon SageMaker (e.g. the TensorFlow estimator) and Google Cloud Platform (TensorFlow Cloud) that make working with TensorFlow especially easy.

TensorFlow offers TensorFlow Serving, which allows you to deploy your model to production and easily manage different versions of it.
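Versioning works through the directory layout: the serving system loads model versions from numbered subdirectories of a model's base path. A minimal sketch of exporting version 1 of a toy model, assuming TensorFlow 2.x; the `Doubler` module and the temporary export location are made up for illustration.

```python
import os
import tempfile

import tensorflow as tf

class Doubler(tf.Module):
    """Hypothetical toy model that doubles its input."""

    @tf.function(input_signature=[tf.TensorSpec(shape=[None], dtype=tf.float32)])
    def __call__(self, x):
        return 2.0 * x

# Base path of the model; each numbered subdirectory is one version.
export_root = os.path.join(tempfile.mkdtemp(), "doubler")
version_dir = os.path.join(export_root, "1")

tf.saved_model.save(Doubler(), version_dir)
print(sorted(os.listdir(version_dir)))
```

Publishing an updated model then means saving it under the next number, for example `doubler/2`, next to the existing version.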

Furthermore, it is easy to deploy TensorFlow models on lightweight platforms such as mobile or IoT devices with TensorFlow Lite, which allows for lighter model implementations without much loss in model accuracy.

And last but not least, TensorFlow is easier to run on a Tensor Processing Unit (TPU), a Google-designed chip built solely for machine learning. It can be considered rocket fuel for a model, and using it is much easier with TensorFlow.

PyTorch

PyTorch, on the other hand, is a great piece of software, yet it enjoys not even half of the perks delivered by TensorFlow. TensorFlow is the one that shines when it comes to delivering production-oriented software.

To keep up with TensorFlow Serving, PyTorch has recently (in 2020) introduced its own solution, TorchServe, but this package does not yet provide the full functionality and ease of use of TensorFlow Serving. Similarly, PyTorch Mobile is under development, but it is not as popular or versatile as TensorFlow Lite.

Popularity and access to learning resources

Both projects enjoy legible documentation and a large user base. Both have enthusiasts and haters, and both come with large communities to turn to for advice. The TensorFlow crowd is bigger and more industry- and production-focused, whereas the PyTorch crowd is more research-oriented.

In fact, when it comes to popularity and learning resources, there are no significant differences.

Debugging, introspection, and code review

Even the best project reaches the point of debugging. So is there any significant difference between PyTorch and TensorFlow when that moment comes?

TensorFlow

TensorFlow enjoys all the perks of the clear design that it forces. Thus, it is easier to check for bugs and mistakes in simple networks, which are common in less sophisticated business solutions. It is a reliable tool to deliver a standard set of code and run it to deliver a certain result.

Surprisingly, given the stability and reliability described above, TensorFlow’s debugger (“tfdbg”) is notoriously hard to work with. As a result, debugging a larger and more complex project can be challenging, if not outright painful.

TensorFlow’s default memory management can also be annoying. By default, it reserves all available memory on the GPU, so you won’t be able to run another process on the same GPU even if that memory is not actively being used. This can be managed manually, but the process is annoying at best and frustrating at worst.
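The manual management usually comes down to asking TensorFlow to allocate GPU memory on demand instead of up front. A minimal sketch, assuming TensorFlow 2.x; on a machine without a GPU the loop simply does nothing.

```python
import tensorflow as tf

# This has to run before TensorFlow initializes any GPU,
# so it belongs at the very top of a program.
gpus = tf.config.list_physical_devices("GPU")
for gpu in gpus:
    # Grow the allocation as needed rather than reserving all memory at once.
    tf.config.experimental.set_memory_growth(gpu, True)

print(f"Memory growth enabled on {len(gpus)} GPU(s)")
```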

PyTorch

With all the flexibility and freedom that come with using PyTorch, it is quite understandable that the framework needs a reliable and convenient debugger. And that’s the point: PyTorch is a better pick when it comes to delivering a sophisticated and complex neural network, where deeply hidden bugs can ruin weeks of work. Debugging in PyTorch is very easy with standard Python tools such as pdb.
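Because the forward pass is ordinary Python, a breakpoint or an assertion can sit in the middle of a network. A minimal sketch; the `Net` model is a made-up example, and the breakpoint is left commented out so that the script runs unattended.

```python
import torch
from torch import nn

class Net(nn.Module):
    """Hypothetical two-layer network used only to show where to pause."""

    def __init__(self):
        super().__init__()
        self.hidden = nn.Linear(4, 8)
        self.out = nn.Linear(8, 2)

    def forward(self, x):
        h = torch.relu(self.hidden(x))
        # Uncomment to drop into pdb here and inspect `h` interactively:
        # breakpoint()
        assert not torch.isnan(h).any(), "NaN in hidden activations"
        return self.out(h)

y = Net()(torch.randn(5, 4))
print(y.shape)
```

Inside the debugger, `h` is a regular tensor, so expressions such as `h.shape` or `h.mean()` can be evaluated on the spot.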

Conclusion – which is better – PyTorch or TensorFlow

Both TensorFlow and PyTorch are great tools that make data scientists’ lives easier and better. Picking the better one is not about the first or the second in the abstract. It is about the effect you want to deliver.

Both frameworks come with pros and cons. With great developers working on both sides, it is only natural that both frameworks only get better and improve upon their shortcomings, making the comparison more difficult and their differences less pronounced. So, when asking the question “which one to pick?” the best answer is “the one that suits the project best.”

Finally, neither tool lives in a vacuum. They constantly influence each other, copying each other’s most convenient solutions while tossing out obsolete ones. It is therefore likely that in the near future the two will be similar to the point of being indistinguishable, and questions like “which is better, TensorFlow or PyTorch?” may soon disappear.

If you wish to talk about which one is better for your project or how to solve your problems with our AI expertise, don’t hesitate to contact us now!
